SAS’s Best Subset Selection by Mallows's Cp is actually Stepwise?

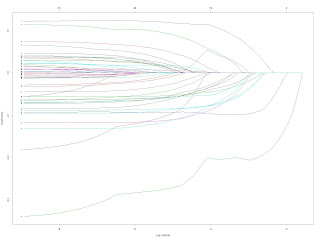

SAS’s Best Subset is actually Stepwise? I answered this question in my next post: Best subset selection uses the branch and bound algorithm to speed up The original post explaining my own confusion: I suspect that the function regsubsets from R library leaps does go through all the possible combinations, and it takes hours for 50 variables. Facing a large number of variables SAS just uses stepwise selection even though the code asks for best subset by adding selection = cp to the model part of proc reg . This is my suspicion, I don’t know whether maybe it never scans all the combinations. I tested with the same dataset from the last post Without cross-validation, I used all the 598 observations to run regsubsets : # R: nv_max <- 25 # up-limit of number of variables to test fit_s <- regsubsets(Share_Temporary~., mydata4, really.big = T , nbest= 1 , nvmax = nv_max ) As we can se...