How to batch analysis multiple data files in R

Step-by-step instructions on how to import & process multiple data files in R (based on the accuracy analysis of 200 cities)

- Technical details

- Challenges

- Visualization of results on the map

I have some data collected for each city, each generated as one data file. And I need to run same analysis for all the 200 data files.

I will go through the whole process in one post. It is not too complicated, which I suppose is why most posts I found online only address part of the issue.

Summary of the process:

Summary of the process:

1. Write a program for a single data file that can do all the analysis correctly.

2. Combine functions to get a final function which will produce a single line of output for all the results I need.

3. Use functional (lappy) or a loop to iterate over indices of the batch of files, attach every line of results into a data frame or write into an output .csv file.

4. Start with small batches and trach which data file causes an error in the program, modify the code to be compatible with all data files

Technical details

1. Read files

Get the list of file names:

# Get the files names

File_Directory <- "D:/where_all_the_files_are/"# The directory of data files

setwd(File_Directory)

File_List <- list.files(pattern = "*.dbf$")2. Manage the enclosing environment inside the function

# The function that summarize all sub-functions looks like this:

getOutput <- function(file_name){

data_ori <- read.dbf(paste(File_Directory, file_name, sep="")) # input data file

# ... calculate all the output ... #

# for example,

test_result1 <- group_t_test(group)

# ... #

All_Output <- data.frame(test_result1, test_result2, ... all the output... , row.names ="") # no row names

return(All_Output)

}For example, this function is going to be a problem to use inside the parent function getOutput if "data_ori" is not passed to the function. Because group_t_test is in the global environment but the data file is not there.

# Using this function inside the parent function (getOutput) is a problem

group_t_test <- function(data_new){

t_test <- t.test(x = data_new$Var1, y = data_ori$Var2)

}group_t_test <- function(data_new, data){

t_test <- t.test(x = data_new$Var1, y = data$Var2)

}

# ...

Test_Output <- group_t_test(data_new, data_ori)3. Output batch results

I will produce the file with 1st line and then attach the rest (I guess this is not optimal, but easy to debug.)

# Output.csv is the file containing output

df <- getOutput(File_List[1])

write.table(df, "D:/somewhere/Output.csv",

col.names = TRUE, row.names = FALSE, append = FALSE, sep = ",")

# Include Col names (variable names), Exclude Row names

attachResult <- function(x){

print(x) # Print file name if processed successfully,

# If the loop stops somewhere we know which data file has problem

write.table(getOutput(x),"D:/somewhere/Output.csv",

col.names = FALSE, row.names = FALSE, append = TRUE, sep = ",")

# Use "append = T" to attach all the results)

}

# Process all the rest files (2: length(File_List))

lapply(File_List[-1], attachResult)Challenge

The main problem is definitely to modify the code to fit all the files. It is very much likely the batch files were produced by other programs (and by other people). The variables are organized in a similar way but there could be small differences in the format (e.g. %m/%d/%y and yyyy-mm-dd for one date variable) or the name of the variables.For example, one variable has several names in different data files: "Var_Name", "Var__Name", "Var_Name_". After some experiments, I think the easiest way was to clean all the variables' names and make them the same for every file:

# Use gsub ("group substitution") to and align the variables names

data_ori <- read.dbf(paste(File_Directory, file, sep=""))

names(data_ori) <- gsub("_", "" , x = names(data_ori))Visualization

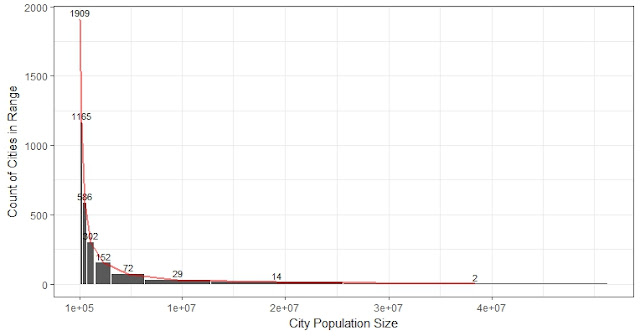

The outputs are some test results (confusion matrix, t-test, etc) for each city.1. For example, to compare the accuracy of two methods, we can compare their differences from the real mean and show for each city (Purple represents NYU, and brown represents another source:

(Done easily by excel 3D map using bubble chart)

2. For t-test results, we can use two layers of bubbles to compare the two groups. Let solid circle represent no significant difference (coded 1) and hollow one represent a significant difference (coded -1):

Adjust the size of the bubble can be helpful (in gif):

5/20

Comments